A Template for a Simple Parallel Program

Overview

Teaching: 0 min

Exercises: 0 minQuestions

What are the main sections of a simple parallel program?

Objectives

Provide a template for a simple parallel program.

Simple Parallel Programs

Simple Parallel Programs - Serial Program Outline

There are typical major sections of a serial computational program.

Part A. Initialization:

- Read input file(s)

- Setting up parameters / precompute some variables

Part B. Work:

- The heavy-lifting computation lies here

Part C. Reporting/saving results:

- Final summary computation

- Reporting results

- Saving output file(s)

Initial assumptions:

- Initiatlization and reporting are assumed to be lightweight (not consuming a lot of CPU cycles)

PROCESS_INPUT_DATA() #Part A: initialization

DO_WORK #Part B: Work

FINALIZE_AND_REPORT_RESULTS() #Part C: Reporting/saving results

Simple Parallel Programs - Parallel Program Outline

There are typical major sections of parallel programs.

Part A. Initialization:

- Reading input file(s)

- Setting up parameters / precompute some variables

- (new) Broadcast parameters to all workers

Part B. Problem decomposition

- Perform the decomposition on the master rank

- Distribute work domains to respective workers

- (Modification exercise for later: Change this so that every worker figures out its own work, so no need to use scatter operation to distribute work domains)

Part C. Parallel work (by all ranks)

- Do the work specified by problem decomposition. The results will be partial results

Part D. (new) Gather partial results back to the master rank

Part E. Reporting/saving results

- Final summary computation

- Reporting results

- Saving output file(s)

Part F. Gathering and reporting/saving final results (by all ranks + master rank)

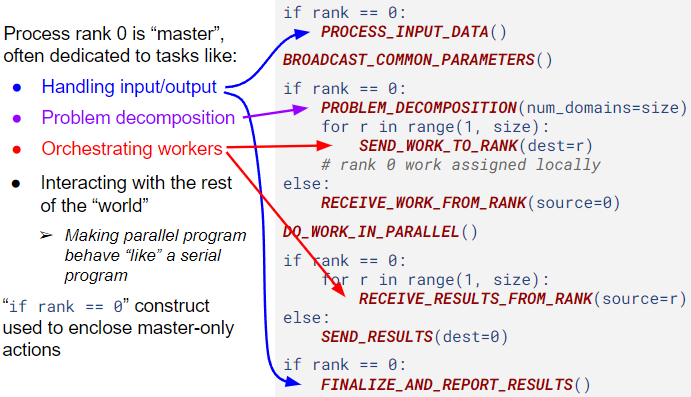

The following is illustrates the simple parallel program template. It uses pseudo-code. Major actions shown in ALL_CAPITAL are pseudo-functions.

# Part A: Initialization

if rank == 0:

PROCESS_INPUT_DATA()

BROADCAST_COMMON_PARAMETERS()

if rank == 0:

# Part B: Problem decomposition

PROBLEM_DECOMPOSITION(num_domains=size)

for r in range(1, size):

SEND_WORK_TO_RANK(dest=r)

# rank 0 work assigned locally

else:

RECEIVE_WORK_FROM_RANK(source=0)

# Part C: parallel work

DO_WORK_IN_PARALLEL()

# Part D: Gather partial results

if rank == 0:

for r in range(1, size):

RECEIVE_RESULTS_FROM_RANK(source=r)

else:

SEND_RESULTS(dest=0)

# Part E and F

if rank == 0:

FINALIZE_AND_REPORT_RESULTS()

Figure: Rank 0 dedicated tasks shown in simple parallel template.

Before You Parallelize

Read the code! Identify parts of the code. What is/are the input/initialization for the data? What are the computations involved? What are the outputs of the code? Run the serial program, notice the overall timing. Which parts of the computation are most expensive?

- Basic: Use a pair of

time.time()calls to measure the timing of a code section - Better: Use code profiler (python module

line_profiler)

Which parts of the computation are most beneficial to parallelize first? Key: How must the data be distributed among workers to enable parallel processing (part of problem decomposition)? Recall that the parallel code requires extra parts mainly for coordinating work, data movement among workers, etc.

Key Points

Provide a commonly occurring template for a parallel (computational) program.

Identify additional pieces in a code as a result of parallelization.

Provide a common pattern & workflow of parallelization of a program.