Introduction to Parallel Programming

Overview

Teaching: 20 min

Exercises: 0 minQuestions

What is and why parallel programming?

What are the different parallel programming models?

What are some parallel programming standards?

Objectives

Get an overview of parallel programming.

Distinguish different parallel program models.

Get an idea of challenges of writing a parallel program.

Introduction

Parallel programming is an arcane field that for a long time was limited to scientific computing, i.e. to the people who use computers to perform numerical computations and simulations for science and engineering. As of today, parallel programs have powered real-time weather forecasting, high-accuracy storm and hurricane predictions, understanding important cellular processes in diseases, design of new materials, drugs, jet engines, jet planes, and many more. All these computations share one or two things in common: They require high-end computing hardware because the required amount of computation is too much for a single computer to handle; or, the size of the data is too big for a single computer to fit.

Parallel computing requires hardware that has more than one processing unit (a single computer or a set of connected computers) and specialized programming techniques (with libraries or language). Such hardware used to be uncommon, but since mid-2000s, commodity processors have been produced with multiple processor cores (simply called “cores”), each of which is capable to perform an independent sequence of computations. This trend continues today, even accelerates; thus brings “parallel computing” to the mass. Virtually every server, workstation, desktop and laptop computers, tablets and even smartphones produced today are “parallel computers”. Not only they have traditional processors called central processing unit (CPU), they may contain one or more graphics procesing units (GPUs) and other types of processors. GPUs, in particular, as a massively parallel processor, play a crucial role in accelerating scientific simulations and deep learning (which is the prevailing implementation of “artificial intelligence” today). These factors underscore the importance of parallel programming.

What is parallel programming? It is a programming technique which entails the use of multiple processors to solve a single problem in less time. There are many modalities of parallel programming that are in use today. In this module, we will focus on one modality, which is the distributed-memory parallel programming. We will introduce Message Passing Interface (MPI), a library widely used library for distributed-memory parallel programming.

Why Parallel Programming?

Parallel programming allows the user to greatly reduce time-to-solution

by splitting the problem among a number of worker-processes.

In other words, parallel programming allows the user to solve a computational problem using multiple computing resources.

It allows the programmer to take advantage of modern architectures composed of multiple processors.

Workers can process different chunks of data simultaneously.

Parallel programming is done on parallel computer architectures such as a cluster computer like Turing, and can also be done in today laptops and desktop computers with multi-core CPUs.

Parallel programming is used when a problem is too big or takes too long to solve.

Parallel programming minimizes the runtime, or time to solution for problems that deal with big data and/or big computations.

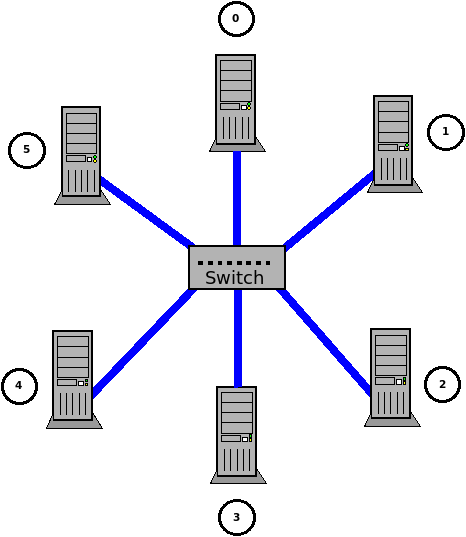

Figure 1: A simplified illustration of a parallel computer as a set of networked computers.

In single processor computers, the user is limited to the memory and computing power of the machine. In parallel computers, many computing resources are pooled together, thus multiplying the computing power; while also increasing memory capacity, thus enabling the processing of larger and complex problems. Today, parallel computers (see Figure 1) still have networked architectures, but they feature faster networks and faster CPUs. Within one of the units of the networked computer, also known as compute node, resides one or more multi-core processor(s). Each multi-core processor has its own memory, independent of other multi-cores, and independent of other compute nodes. This architecture provide the user with a powerful computing platform, that when utilized optimially, will solve larger and more complex problems faster.

Power of Parallel Computing Example (Scaling)

Figure 2: Oversimplified example of the power of parallel computing.

1 worker completes a task in 6 hours. 2 workers complete in 3 hours. 4 workers complete in 1.5 hours. Given that there are N workers (and this pattern of speedup continues), how quickly can we complete the task if we implement parallelism?

How quickly can 400 workers finish the task?

Hints

In this overly simplified example, assume linear speedup continues. N workers complete the task in 6/N hours. So, 400 workers will finish in 54 seconds! 6/400x60x60 = 54. Note, this is assuming ideal linear speedup, which is not possible to achieve given restraints explained later in the lessons.

High-Level Strategy for Parallel Programming

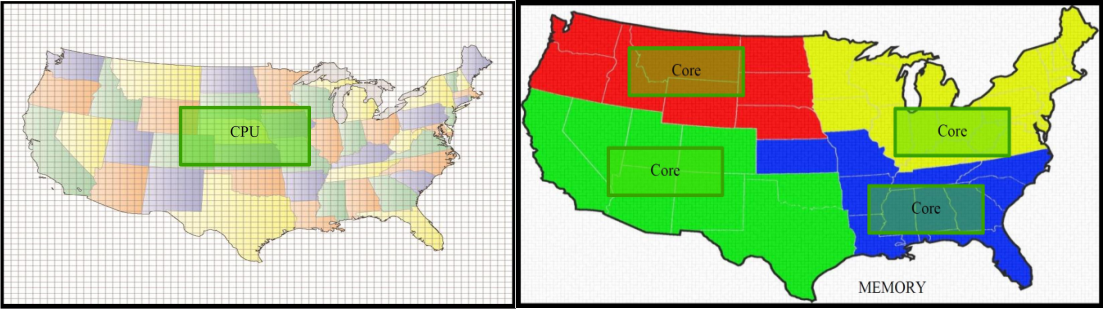

Consider the following problem: calculate the weather of the US. Calculating the weather for any region requires very computationally expensive computations. This problem requires parallel programming because this is a time-sensitive computation. Calculating the weather needs to be fast so the reporting of the weather is current. Calculating the weather requires the program to learn on past weather data and use it to predict the next future weather values. The learning (and subsequent predicting) of the data is independent based on region.

In serial, calculating the weather of the US uses one CPU to make all the computations necessary. The program will one-by-one perform the learning and predicting tasks for each of the regions.

This can be solved in parallel by mapping a computer resource/core to a particular region of the US. This makes solving the weather for the US faster. As explained later in the module, this is a data parallel problem that utilizes shared-memory architecture.

Figure 3: Left: an example of solving the weather example in serial using one CPU. Right: solving the weather example in parallel. Figures from Chen, Shaohao. “Introduction to High Performance Computing.” Boston University. https://www.bu.edu/tech/files/2017/09/Intro_to_HPC.pdf.

Figure 3: Left: an example of solving the weather example in serial using one CPU. Right: solving the weather example in parallel. Figures from Chen, Shaohao. “Introduction to High Performance Computing.” Boston University. https://www.bu.edu/tech/files/2017/09/Intro_to_HPC.pdf.

The high-level strategy is to divide a spatial problem into regions. Then, map each region to a computer resource. Then, figure out how to take care of the interactions between regions/resources.

What Is a Parallel Program?

A parallel program could be defined as computer code with the capability to carry out computations simultaneously using more than one process or thread (both known as workers). This means that a single launch of the program will spawn a specified amount of processes to solve a given problem. So far, most of the programs we dealt with have been written to run on a problem specific platform handling the parallel processing, but the program codes have so far been serial.

What is the difference between a parallel program and traditional serial program? At any given time, there is only one running worker solving the problem in sequential order. A parallel program on the other hand spawns multiple workers which, depending on the situation, can be concurrently and independently working on a given aspect of the problem or doing the exact same operation as peer workers but on its own set of data. Multiple processes (workers) live in the same time frame. More on the differences between serial and parallel programs next.

Type of Parallel Problems - Loosely vs. Tightly Coupled Problems

Loosely coupled

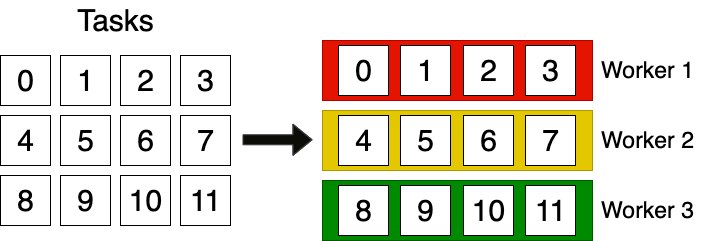

In Module 1, we explored a “loosely coupled problem” - processing 1998 spam set using many independent workers to work in parallel on independent tasks (with no communication except for initial division of labor and final summary). Loosly coupled problems execute tasks independently to many workers with little communication besides the beginning and end of all tasks. In principle, there is no required parallel programming framework necessary. They are trivial to parallelize.

Figure: Example of a loosely coupled parallel problem.

Tightly coupled

In this module, we will focus on “tightly coupled problems.” A single problem solved by many (tightly coupled) workers. For tightly coupled problems, parts of the task can be executed in parallel, but there exists a strong interdependence among tasks. Cannot just “farm out” and then gather results at the end. Communication and coordination among workers are required throughout. Communication and coordination is needed (from “somewhat frequently” to “very frequently”). This communication and coordination is managed through a parallel programming framework (ex. MPI).

Figure: Tightly Coupled Parallel Problems.

Parallelism: Loosly and Tightly Coupled Problem

Consider the following problems. Classify them as loosly or tightly coupled problems.

- Simulation of motions of drug molecules in a biological system (cell, or tissue).

- Counting votes in a national election.

- Automotive factory - building a car.

- Weather problem as stated above.

Hints

Motion of drug molecules: Tightly coupled because the motion of one drug molecule can influence the motion of another drug molecule. An example is if the drug molecules collide.

Counting votes: Loosly coupled. There is coordination in assigning a different section of the country to each vote counting resource. Each vote counting resource counts only it’s assigned region. Coordination is needed at the end to collect the sum of all the votes from each vote counting entity.

Building a car: Tightly coupled. Several tasks can be run in parallel, but communication and sharing of parts is necessary to complete the assembly. For example, the following tasks can be run in parallel: Parallel Task I: assemble parts of the main frame, engine, doors, etc. Parallel Task II: install the doors, seats, dashboard, wheels, tires, etc. to the main frame Parallel Task III: Install the wiring for door components and paint the car.

Weather: Loosely coupled. Based on how the problem was described above (super high-level), only mentioning the learning and predicting phase only, it is loosely coupled. It require the initial mapping of computing resources and the final reporting.

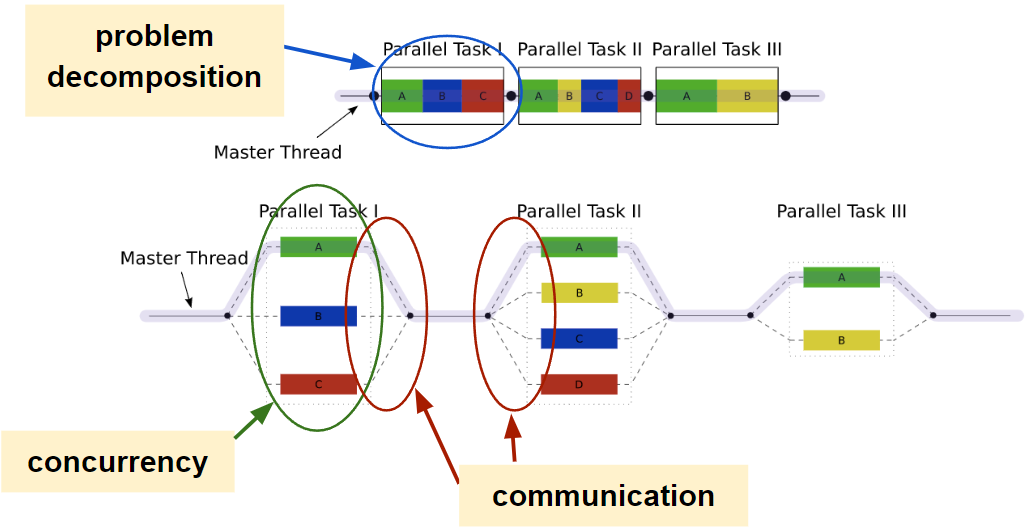

There are three main key components of (tightly coupled) parallel programming. Component 1 is problem decomposition. Split the problem into parts that should be able to process independently (to some extent). This is usually done by the user. Component 2 is concurrency. The idea that multiple workers can work on independent tasks simultaneously. Parts of the work can be done simultaneously. Component 3 is communication and coordination. A data exchange among workers is necessary to solve the problem. Data sharing is handled through parallel frameworks (such as OpenMP and MPI).

Figure: Key components of parallel programming.

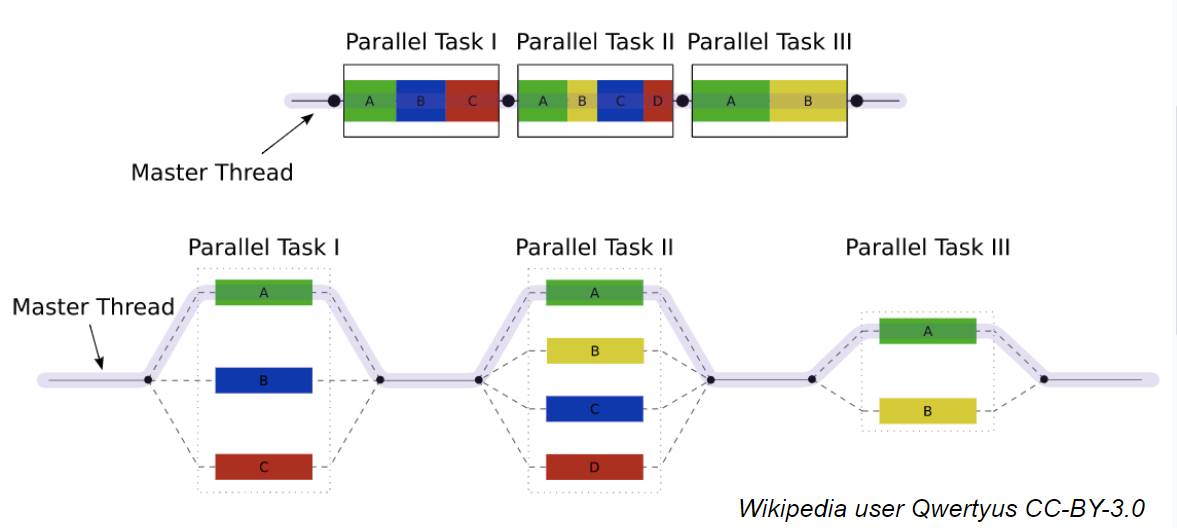

Consider the car assembly problem. The problem can be decomposed into Parallel Task I: assemble parts of the main frame, engine, doors, etc., Parallel Task II: install the doors, seats, dashboard, wheels, tires, etc. to the main frame, and Parallel Task III: Install the wiring for door components and paint the car.

Each of the sub-tasks in each of the three parallel tasks can be done concurrently. This can be done on independent tasks that require no coordination or communication. For example, assembling the main frame, engine, and doors can be done concurrently.

Parallel Task II has to wait on Parallel Task I, since the doors, seats, dashboard, wheels, tires, etc. cannot be installed on the main frame without the main frame first being assembled. This requires coordination and communication between the tasks - Parallel Task I needs to share the assembled main frame. Likewise, the wiring for the doors can only be done in conjunction with the doors that were installed in Parallel Task II.

Key Points

Motivation for parallel programming: too big or too long

Parallel computing definition: using multiple computing resources to solve a bigger computational problem

Loosely and tightly coupled problems

Key components of tightly coupled parallel programming: problem decomposition, concurrency, communication and coordination