Serial and Parallel Programming

Overview

Teaching: 20 min

Exercises: 0 min

Questions

What are computing resources (hardware)?

What is serial computing and programming?

What is parallel computing and programming?

What memory schemas are available for parallel computers?

Objectives

Learn about computing resources for serial and parallel programming

Learn about shared and distributed memory models

Introduction

In this section we present a simplified picture of serial and parallel computers, and of how parallel programs differ from serial programs. Understanding the similarities and differences between the two kinds of computers and programs is crucial for grasping the features specific to parallel programming.

At the fundamental level, the vast majority of “computers” today follow the architecture designed by John von Neumann and are essentially composed of the following parts:

- one or more processing units;

- working memory;

- input device;

- output device;

- long-term data storage.

Serial Computing and Serial Programs

Components of a Serial Computer (Hardware)

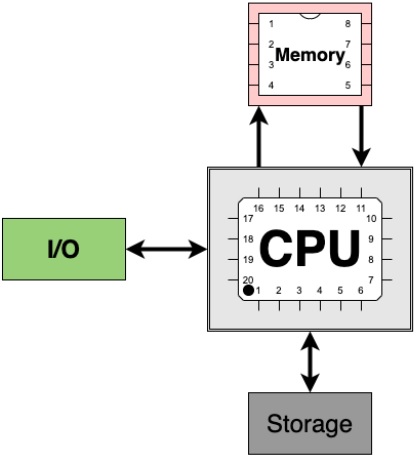

The major parts of a serial computer are the CPU (the “brain”), memory (for working data), storage (for long-term, persistent data), and input/output (for interaction with the user). This is a gross oversimplification. Today’s computers are much more complex: they have multicore CPUs and complex memory hierarchies and layouts.

Figure: Serial computer major components.

Serial Programs

In a simple model, a program is a set of instructions (“code”) that runs on one CPU. One running program, or “process”, consists of three major components plus an optional fourth. The first is the set of instructions (“code”). The second is the execution state, that is, where we are in the execution of the program. The instructions are executed sequentially by the CPU, and there is only one processor that runs the program. The third is the data operated on and manipulated by the code: the values of variables as well as the working data, all of which are stored in memory. The fourth, which is optional, is the interaction with storage and with the user (input and output).

Serial Program Example

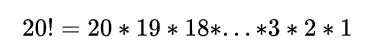

Calculate 20! (20 factorial).

Mathematically, $20! = 1 \times 2 \times 3 \times \cdots \times 20$. In Python:

    #!/usr/bin/env python3

    result = 1
    for i in range(2, 21):   # i = 2, 3, ..., 20
        result *= i          # one multiplication at a time, in order
    print(result)            # 2432902008176640000

Parallel Computing and Parallel Programming

A parallel computer consists of many serial “computers” (or “workers”) that are connected so that they can work together as a single “system.” An interconnect (a network or mesh) provides a direct link between the computers. Each “worker” can run one task. All of the tasks must have a way to communicate and coordinate with each other. There are two main models of memory organization: shared memory and distributed memory.

A parallel program is the totality of all the mutually communicating “serial” processes/tasks.

Shared Memory Model

The first model is called a shared memory model.

It consists of one running process. The parallelization is done by multiple threads (or “workers”) that run simultaneously.

Data resides in a shared memory space.

All of the threads can access the same data.

This means any worker has access to the memory space allocated to the problem.

Because the workers access the same memory space, care must be taken to prevent race conditions, which occur when threads read and write the same region of data at the same time without coordination, so that the result depends on the timing.

No explicit communication is required since the workers access the same memory space.
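As a minimal sketch of the shared memory model, the 20! calculation can be split across several threads of one process. This is an illustration only, not a prescribed method: the function name `threaded_factorial`, the `shared` dictionary, and the choice of four threads are assumptions made for the example. Each thread multiplies its own range of numbers and then updates the single shared result while holding a lock, which is one common way to avoid a race condition.

```python
#!/usr/bin/env python3
import threading


def threaded_factorial(n, num_threads=4):
    """Compute n! with several threads that update one shared variable."""
    shared = {"result": 1}      # one memory location visible to every thread
    lock = threading.Lock()     # guards the read-modify-write on shared["result"]

    def worker(lo, hi):
        # Each thread works on its own local variable first ...
        partial = 1
        for i in range(lo, hi + 1):
            partial *= i
        # ... and touches the shared data only while holding the lock.
        with lock:
            shared["result"] *= partial

    # Split 1..n into contiguous chunks, one per thread (ceiling division).
    chunk = max(1, -(-n // num_threads))
    threads = [
        threading.Thread(target=worker, args=(lo, min(lo + chunk - 1, n)))
        for lo in range(1, n + 1, chunk)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                # wait until every worker has finished
    return shared["result"]


if __name__ == "__main__":
    print(threaded_factorial(20))
```

No data is sent between the threads; they simply read and write the same variable. Note that in standard CPython builds the global interpreter lock keeps pure-Python threads from running arithmetic truly in parallel, so this sketch shows the memory model rather than a speedup.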

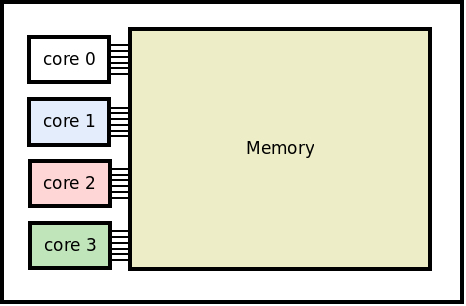

On a physical computer, each worker is hosted by a CPU core, for example on a multi-core CPU.

The shared memory is the corresponding main memory such as RAM.

Because the memory is shared, this model tends to be implemented on a single node with multi-core CPUs.

The degree of parallelism is specified through cpus-per-task (for example, the --cpus-per-task option of the Slurm scheduler), which sets the number of CPU cores given to the process. As explained later, collisions and race conditions can occur if they are not properly managed. POSIX Threads (Pthreads) and OpenMP are the standard tools for multi-threaded (parallel) programming. This model is limited by the number of cores that share the same memory.

Note that this model is not the main focus of this module.

Figure: Shared memory multi-core processor.

Distributed Memory Model

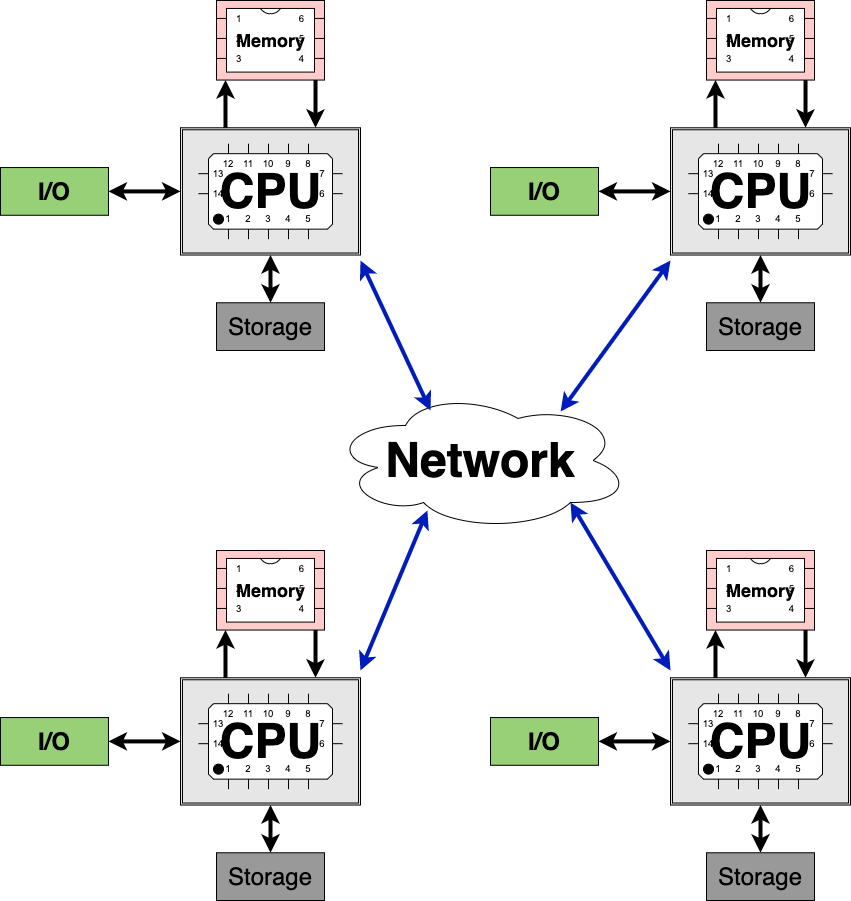

The second model is the distributed memory model. This model can be implemented on cluster computers with several compute nodes, such as Turing, but it can also be used on a single multi-core node.

Here, each worker has its own chunk of memory, which only that worker can access. A message-passing library, such as an implementation of MPI (the Message Passing Interface), allows workers to share data.

In this model, workers are typically connected through a computer network. Workers send and receive messages through the network if they are located on different nodes (inter-node communication). The network is not used when all workers are hosted on one computer. The bandwidth of the network largely determines the speed of communication and thus affects how quickly a problem can be solved.

Distributed-memory parallelization is typically used when multiple processes run in parallel, each producing an intermediate result, and the intermediate results are then aggregated into a single result. Data is exchanged among tasks through explicit communication (send, receive, etc.).
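The pattern of private memory plus explicit messages can be sketched with only the Python standard library, again using the 20! example. This is a hedged illustration and not MPI: each worker is a separate Python process started with `subprocess`, and the "messages" are lines of text sent through pipes. The names `WORKER_CODE` and `distributed_factorial`, and the choice of four workers, are assumptions made for the example.

```python
#!/usr/bin/env python3
import subprocess
import sys

# Code run by every worker. Each worker is a separate process with its own
# private memory; it only knows what arrives in a message.
WORKER_CODE = """
import sys
lo, hi = map(int, sys.stdin.readline().split())   # receive a message
partial = 1
for i in range(lo, hi + 1):
    partial *= i
print(partial)                                     # send the reply
"""


def distributed_factorial(n, num_workers=4):
    """Compute n! by aggregating partial products from separate processes."""
    # Split 1..n into contiguous chunks, one per worker (ceiling division).
    chunk = max(1, -(-n // num_workers))
    ranges = [(lo, min(lo + chunk - 1, n)) for lo in range(1, n + 1, chunk)]

    # Start all workers before sending any work so that they run concurrently.
    workers = [
        subprocess.Popen([sys.executable, "-c", WORKER_CODE],
                         stdin=subprocess.PIPE, stdout=subprocess.PIPE,
                         text=True)
        for _ in ranges
    ]
    # Explicit "send": each worker receives only its own range.
    for proc, (lo, hi) in zip(workers, ranges):
        proc.stdin.write(f"{lo} {hi}\n")
        proc.stdin.close()

    # Explicit "receive": collect the partial results and aggregate them.
    result = 1
    for proc in workers:
        reply = proc.stdout.read()
        proc.stdout.close()
        proc.wait()
        result *= int(reply)
    return result


if __name__ == "__main__":
    print(distributed_factorial(20))
```

Unlike the shared memory case, no worker can see another worker's variables, so every piece of data that crosses a process boundary appears as an explicit send or receive. On a cluster, an MPI library would play the role of the pipes and would carry the messages over the network between nodes.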

Figure: Distributed memory model.

Shared Memory vs. Distributed Memory Models

Recall the weather example.

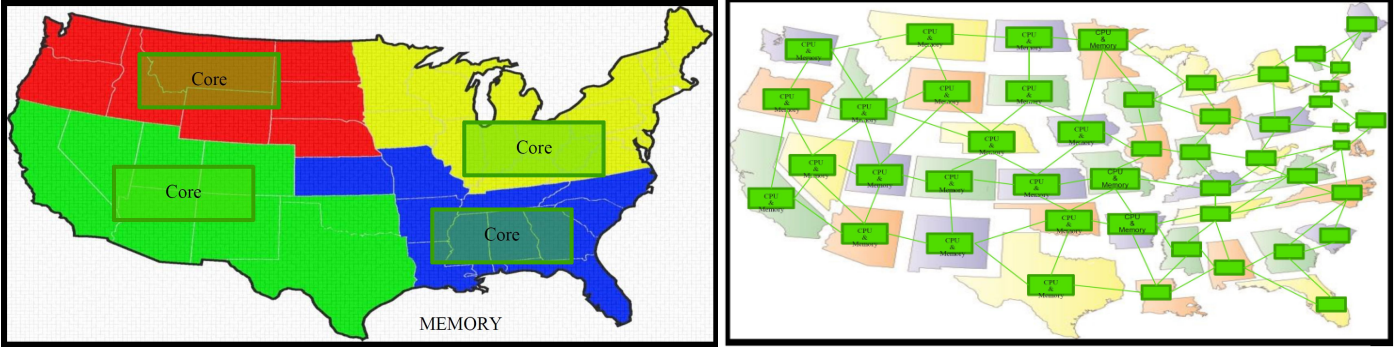

Figure: The left side solves the weather example using shared memory. The right side solves the weather example using distributed memory. Figures from Chen, Shaohao. "Introduction to High Performance Computing." Boston University. https://www.bu.edu/tech/files/2017/09/Intro_to_HPC.pdf.

Recall: Parallel Programming

In single-processor computers, the user is limited to the memory and computing power of one machine. In parallel computers, many computing resources are put together, which increases both the computing power and the memory capacity and thus enables the processing of larger and more complex problems. Today, parallel computers still have networked architectures, but they feature faster networks and faster CPUs. Each unit of the networked computer, also known as a compute node, contains one or more multi-core processors. Each multi-core processor has its own memory, independent of the other multi-core processors and of the other compute nodes. This architecture provides the user with a powerful computing platform that, when used optimally, solves larger and more complex problems faster.

Key Points

Serial computer’s major parts: CPU, memory, storage, input/output.

Serial programming: one process on one CPU, with instructions executed in sequential order.

Parallel computer: multiple connected computers that can coordinate and communicate.

Shared memory: utilizes multi-core threading (within one node).

Distributed memory: utilizes explicit message passing to exchange information between processors.