Using HPC for Parallel Processing

Overview

Teaching: 15 min

Exercises: 15 minQuestions

How do we run computational jobs in parallel on a modern HPC system?

What are key issues to watch out to achieve efficient parallel execution on HPC?

Objectives

Perform manual parallelization of a series of jobs to run on HPC.

Understand key issues that must be addressed to maximize the benefit of parallelization.

Parallelization: Reducing Time to Solution

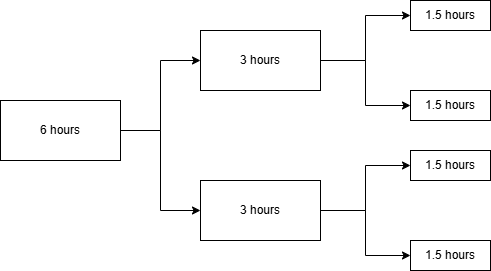

To reduce the time-to-solution through parallelization, tasks are divided among multiple workers and executed concurrently. This process can be visualized in the following diagram.

Ideally, the time it takes to complete a task with parallelization, known as time-to-solution, can be calculated as T(one work) / N, where N represents the number of workers. This means that as the number of workers increases, the time to solve the task should decrease, assuming the task can be efficiently divided among them.

Speeding Up Spam Analysis: Manual Splitting and Parallelization

To manually partition the year1998.slurm file into two separate jobs,

year1998a.slurm and year1998b.slurm, follow these steps.

Then, repeat the process for the years 1999 and 2000.

Step-by-Step Instructions

create a directory for split files(x2 means 2 parallel files):

mkdir year1998_x2

Create a copy of the original file for the first job (1998a):

cp year1998.slurm year1998_x2/year1998a.slurm

Edit year1998a.slurm to include data from March to July:

nano year1998a.slurm

Make sure to remove or comment out any data not from March to July.

Create a copy of the original file for the second job (1998b):

cp year1998.slurm year1998_x2/year1998b.slurm

Edit year1998b.slurm to include data from August to December:

nano year1998b.slurm

Make sure to remove or comment out any data not from August to December.

Repeat this process for the year 1999 and 2000.

If it hasn’t been done previously, you can also run the year 2000 job using just one worker.

To further optimize, run the year 1999, 2000 job with two workers, dividing the workload equally between the months.

Continue to paralle

Continue this method by running the year 2000 job with four workers, and then with six workers, each time splitting the workload into identical numbers of months for each worker.

To analyze the scaling performance of your computations using multiple workers, you can extract and plot the speedup achieved through parallelization.

For example, we can extract the time from year1998_x2 OUTPUT files:

cd year1998_x2

grep 'Total time' slurm-*.out

Then, input the time u extract from different output files into the form. you should copy this google sheet to your own.

Issues with Load Balancing

In parallel computation, the effect of load imbalance is significant as the longest-running process can weigh down the overall performance of the system. This occurs when one or more tasks take significantly longer to complete than others, causing the faster tasks to idle while waiting for the slowest one to finish.

To mitigate the load imbalance, one effective strategy is rebalancing. This involves redistributing the tasks more evenly among the available processors during the computation. Rebalancing can be dynamic, adjusting the task distribution in real-time based on workload, or static, determined before the computation starts based on predicted task durations.

For learners exploring parallel computation, particularly in tasks like analyzing the origin of spam emails, it’s beneficial to experiment with alternative methods of splitting the workload. Instead of splitting tasks by fixed amounts (like time intervals or predefined sets of data), learners can try more adaptive methods that account for variability in task complexity or size. This approach helps ensure that all processors finish their tasks closer in time, enhancing the efficiency of the parallel computation.

Key Points

A large job can be split into smaller jobs to reduce the time to solution.